
Linear Regression with tidymodels

In this tutorial, we will learn about linear regression with

tidymodels. We will start by fitting a linear regression

model to the advertising data set that is used throughout

chapter 3 of our course textbook, An

Introduction to Statistical Learning.

Then we will focus on building our first machine learning pipeline, with data resampling, feature engineering, model fitting, and model accuracy assessment using the workflows, rsample, recipes, parsnip, and tune packages from tidymodels.


Introduction to Linear Regression

The R code below will load the data and packages we will

be working with throughout this tutorial. The vip package

is used for exploring predictor variable importance. We will use this

package for visualizing which predictors have the most predictive power

in our linear regression models.

# Load libraries

library(tidymodels)

library(vip) # for variable importance

# Load data sets

advertising <- readRDS(url('https://gmubusinessanalytics.netlify.app/data/advertising.rds'))

home_sales <- readRDS(url('https://gmubusinessanalytics.netlify.app/data/home_sales.rds')) %>%

  select(-selling_date)

Data

We will be working with the advertising data set, where each row represents a store from a large retail chain along with its sales revenue and advertising budgets, and the home_sales data, where each row represents a home sale in the Seattle area between 2014 and 2015.

Take a moment to explore these data sets below.

| Sales <dbl> | TV <dbl> | Radio <dbl> | Newspaper <dbl> |
|---|---|---|---|
| 22.1 | 230.1 | 37.8 | 69.2 |
| 10.4 | 44.5 | 39.3 | 45.1 |
| 9.3 | 17.2 | 45.9 | 69.3 |
| 18.5 | 151.5 | 41.3 | 58.5 |
| 12.9 | 180.8 | 10.8 | 58.4 |
| 7.2 | 8.7 | 48.9 | 75.0 |
| 11.8 | 57.5 | 32.8 | 23.5 |
| 13.2 | 120.2 | 19.6 | 11.6 |
| 4.8 | 8.6 | 2.1 | 1.0 |
| 10.6 | 199.8 | 2.6 | 21.2 |

| selling_price <dbl> | city <fct> | house_age <dbl> | bedrooms <dbl> | bathrooms <dbl> |
|---|---|---|---|---|
| 280000 | Auburn | 23 | 6 | 3.00 |
| 487000 | Seattle | 9 | 4 | 2.50 |
| 465000 | Seattle | 0 | 3 | 2.25 |
| 411000 | Seattle | 7 | 2 | 2.00 |
| 570000 | Bellevue | 16 | 3 | 2.50 |
| 546000 | Bellevue | 16 | 3 | 2.50 |
| 617000 | Bellevue | 16 | 3 | 2.50 |
| 635000 | Kirkland | 9 | 3 | 2.50 |
| 872750 | Redmond | 24 | 3 | 2.50 |
| 843000 | Redmond | 24 | 3 | 2.50 |

Data Splitting

The first step in building regression models is to split our original data into a training and test set. We then perform all feature engineering and model fitting tasks on the training set and use the test set as an independent assessment of our model’s prediction accuracy.

We will be using the initial_split() function from

rsample to partition the advertising data into

training and test sets. Remember to always use set.seed()

to ensure your results are reproducible.

set.seed(314)

# Create a split object

advertising_split <- initial_split(advertising, prop = 0.75,

strata = Sales)

# Build training data set

advertising_training <- advertising_split %>%

training()

# Build testing data set

advertising_test <- advertising_split %>%

testing()
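As a quick sanity check on a split, the sketch below confirms that every row ends up in exactly one of the two sets and compares the response distribution across them. It uses simulated data (`sim_data` and its columns are made up for illustration) rather than the advertising data, so the numbers it prints are not results from this tutorial.

```r
library(tidymodels)

set.seed(314)

# Simulated data standing in for a real data set (illustration only)
sim_data <- tibble(x = rnorm(200),
                   y = 3 + 2 * x + rnorm(200))

# Stratify on the response, as in the tutorial
sim_split <- initial_split(sim_data, prop = 0.75, strata = y)
sim_training <- training(sim_split)
sim_test <- testing(sim_split)

# Every row lands in exactly one of the two sets
nrow(sim_training) + nrow(sim_test) == nrow(sim_data)

# With stratification, the response should look similar in both sets
summary(sim_training$y)
summary(sim_test$y)
```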

Model Specification

The next step in the process is to build a linear regression model object to which we fit our training data.

For every model type, such as linear regression, there are numerous

packages (or engines) in R that can be used.

For example, we can use the lm() function from base

R or the stan_glm() function from the

rstanarm package. Both of these functions will fit a linear

regression model to our data with slightly different

implementations.

The parsnip package from tidymodels acts

like an aggregator across the various modeling engines within

R. This makes it easy to implement machine learning

algorithms from different R packages with one unifying

syntax.

To specify a model object with parsnip, we must:

- Pick a model type

- Set the engine

- Set the mode (either regression or classification)

Linear regression is implemented with the linear_reg() function in parsnip. To set the engine and mode, we use set_engine() and set_mode(), respectively. Each one of these functions takes a parsnip object as an argument and updates its properties.

To explore all parsnip models, please see the documentation where you can search by keyword.

Let’s create a linear regression model object with the

lm engine. This is the default engine for most

applications.

lm_model <- linear_reg() %>%

set_engine('lm') %>% # adds lm implementation of linear regression

set_mode('regression')

# View object properties

lm_model

Linear Regression Model Specification (regression)

Computational engine: lm
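To illustrate the "one unifying syntax" idea, the sketch below lists the engines available for linear_reg() and then fits the same model with two engines, changing only the set_engine() call. It uses the built-in mtcars data and the hypothetical names `lm_cars` and `glm_cars`, and it assumes the installed parsnip version offers the glm engine for linear_reg().

```r
library(tidymodels)

# Engines that the currently loaded packages provide for linear_reg()
show_engines("linear_reg")

# Same model type and formula, two different engines
lm_cars <- linear_reg() %>%
  set_engine("lm") %>%
  set_mode("regression") %>%
  fit(mpg ~ wt + hp, data = mtcars)

glm_cars <- linear_reg() %>%
  set_engine("glm") %>%   # assumption: glm engine is available
  set_mode("regression") %>%
  fit(mpg ~ wt + hp, data = mtcars)

# Both engines estimate the same least squares coefficients
coef(lm_cars$fit)
coef(glm_cars$fit)
```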

Fitting to Training Data

Now we are ready to train our model object on the

advertising_training data. We can do this using the

fit() function from the parsnip package. The

fit() function takes the following arguments:

- a parsnip model object specification
- a model formula
- a data frame with the training data

The code below trains our linear regression model on the

advertising_training data. In our formula, we have

specified that Sales is the response variable and

TV, Radio, and Newspaper are our

predictor variables.

We have assigned the name lm_fit to our trained linear

regression model.

lm_fit <- lm_model %>%

fit(Sales ~ ., data = advertising_training)

# View lm_fit properties

lm_fit

parsnip model object

Call:

stats::lm(formula = Sales ~ ., data = data)

Coefficients:

(Intercept) TV Radio Newspaper

2.90436 0.04597 0.18432 0.00237
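Written out with the coefficient estimates printed above, the fitted model corresponds to the following equation, where $\widehat{\text{Sales}}$ denotes the predicted response:

$$
\widehat{\text{Sales}} = 2.90436 + 0.04597\,\text{TV} + 0.18432\,\text{Radio} + 0.00237\,\text{Newspaper}
$$

As a worked example, a store with TV = 230.1, Radio = 37.8, and Newspaper = 69.2 (the first row of the advertising preview) would receive the prediction

$$
2.90436 + 0.04597(230.1) + 0.18432(37.8) + 0.00237(69.2) \approx 20.6
$$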

Exploring Training Results

As mentioned in the first R tutorial, most model objects in

R are stored as specialized lists.

The lm_fit object is a list that contains all of the

information about how our model was trained as well as the detailed

results. Let’s use the names() function to print the named

objects that are stored within lm_fit.

The important objects are fit and preproc.

These contain the trained model and pre-processing steps (if any are

used), respectively.

names(lm_fit)

[1] "lvl" "spec" "fit" "preproc" "elapsed"

[6] "censor_probs"

To print a summary of our model, we can extract fit from

lm_fit and pass it to the summary() function.

We can explore the estimated coefficients, their t-statistics and p-values, the overall F-statistic, the residual standard error (an estimate closely related to the RMSE on the training data), and the R² value.

However, this feature is best for visually exploring our results on the training data since the results are not returned as a data frame. In the coming sections, we will explore numerous functions that can automatically extract this information from a linear regression results object.

Below, we use the pluck() function from purrr to extract the fit results from lm_fit. This is the same as using lm_fit$fit or lm_fit[['fit']].

lm_fit %>%

# Extract fit element from the list

pluck('fit') %>%

# Pass to summary function

summary()

Call:

stats::lm(formula = Sales ~ ., data = data)

Residuals:

Min 1Q Median 3Q Max

-8.656 -0.902 0.247 1.249 2.801

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 2.90436 0.38649 7.51 5.5e-12 ***

TV 0.04597 0.00173 26.57 < 2e-16 ***

Radio 0.18432 0.01018 18.11 < 2e-16 ***

Newspaper 0.00237 0.00709 0.33 0.74

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.8 on 144 degrees of freedom

Multiple R-squared: 0.887, Adjusted R-squared: 0.884

F-statistic: 375 on 3 and 144 DF, p-value: <2e-16
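As an alternative to pluck(), parsnip also provides extract_fit_engine(), which returns the underlying engine object. The sketch below shows this on the built-in mtcars data; `cars_fit` and `engine_fit` are hypothetical names used only for this illustration.

```r
library(tidymodels)

cars_fit <- linear_reg() %>%
  set_engine("lm") %>%
  set_mode("regression") %>%
  fit(mpg ~ wt + hp, data = mtcars)

# Pull out the object created by the engine (here, a base R lm object)
engine_fit <- extract_fit_engine(cars_fit)
class(engine_fit)

# It holds the same coefficients as the 'fit' element of the parsnip object
all.equal(coef(engine_fit), coef(cars_fit$fit))

# Any function that accepts an lm object can now be used
summary(engine_fit)
```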

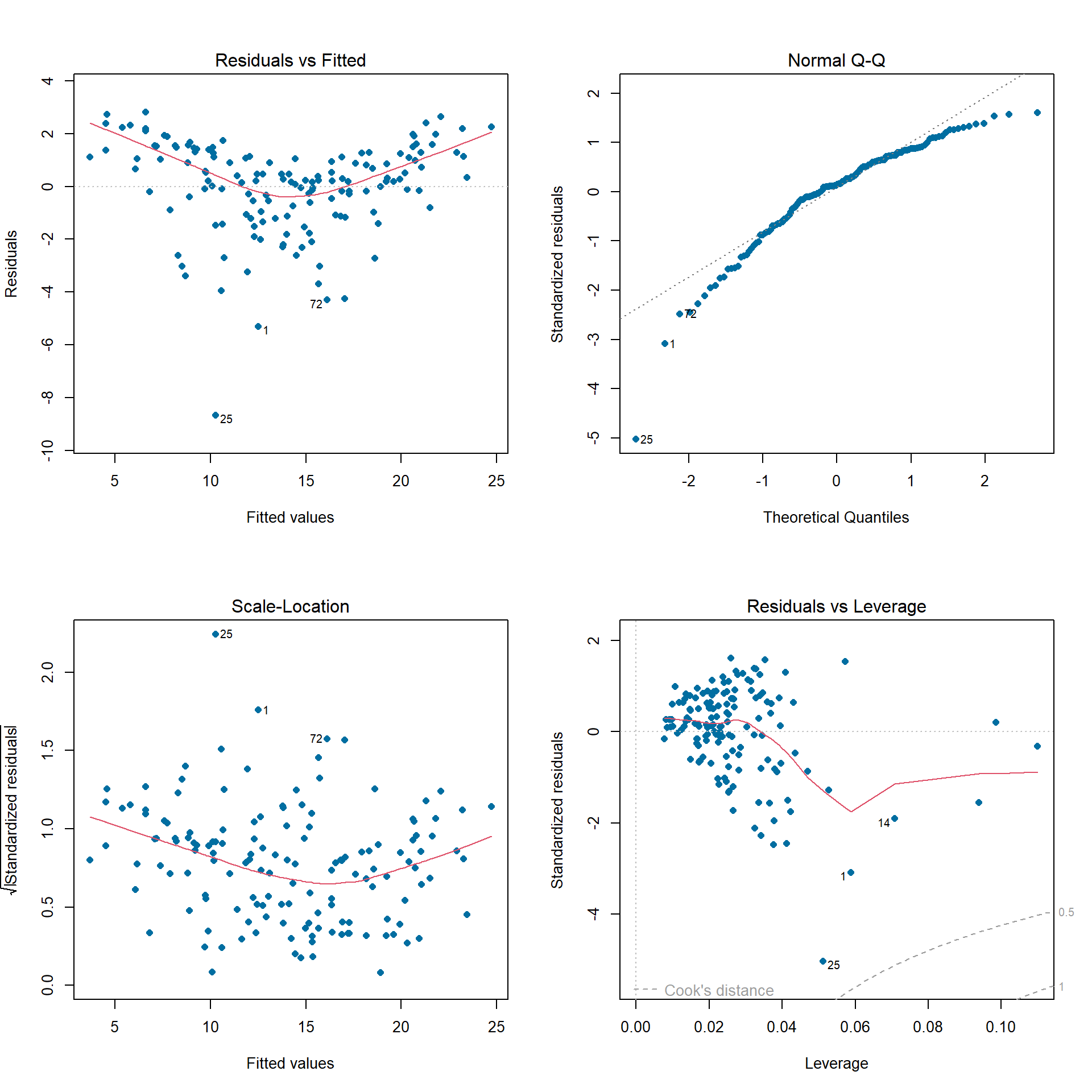

We can use the plot() function to obtain diagnostic

plots for our trained regression model. Again, we must first extract the

fit object from lm_fit and then pass it into

plot(). These plots provide a check for the main

assumptions of the linear regression model.

par(mfrow=c(2,2)) # plot all 4 plots in one

lm_fit %>%

pluck('fit') %>%

plot(pch = 16, # optional parameters to make points blue

col = '#006EA1')

Tidy Training Results

To obtain the detailed results from our trained linear regression

model in a data frame, we can use the tidy() and

glance() functions directly on our trained

parsnip model, lm_fit.

The tidy() function takes a linear regression object and returns a data frame of the estimated model coefficients and their associated standard errors, t-statistics, and p-values.

The glance() function will return performance metrics obtained on the training data such as the R² value (r.squared) and the residual standard error (sigma), which is closely related to the RMSE.

# Data frame of estimated coefficients

tidy(lm_fit)

| term <chr> | estimate <dbl> | std.error <dbl> | statistic <dbl> | p.value <dbl> |
|---|---|---|---|---|
| (Intercept) | 2.9044 | 0.3865 | 7.51 | 5.5e-12 |
| TV | 0.0460 | 0.0017 | 26.57 | 2.2e-57 |
| Radio | 0.1843 | 0.0102 | 18.11 | 6.0e-39 |
| Newspaper | 0.0024 | 0.0071 | 0.33 | 7.4e-01 |
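Because tidy() returns a data frame, the coefficient table can be processed with the usual dplyr verbs. The sketch below, on the built-in mtcars data with the hypothetical names `cars_fit` and `cars_coefs`, adds confidence intervals and keeps only the predictors with small p-values. The 0.05 cutoff is an arbitrary choice for illustration.

```r
library(tidymodels)

cars_fit <- linear_reg() %>%
  set_engine("lm") %>%
  set_mode("regression") %>%
  fit(mpg ~ wt + hp, data = mtcars)

# conf.int = TRUE adds conf.low and conf.high columns
cars_coefs <- tidy(cars_fit, conf.int = TRUE)

# Keep predictors (not the intercept) with a p-value below 0.05
cars_coefs %>%
  filter(term != "(Intercept)", p.value < 0.05) %>%
  arrange(p.value)
```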

# Performance metrics on training data

glance(lm_fit)

| r.squared <dbl> | adj.r.squared <dbl> | sigma <dbl> | statistic <dbl> | p.value <dbl> | df <dbl> | logLik <dbl> | AIC <dbl> | BIC <dbl> | deviance <dbl> |
|---|---|---|---|---|---|---|---|---|---|
| 0.89 | 0.88 | 1.8 | 375 | 7.8e-68 | 3 | -292 | 594 | 609 | 449 |

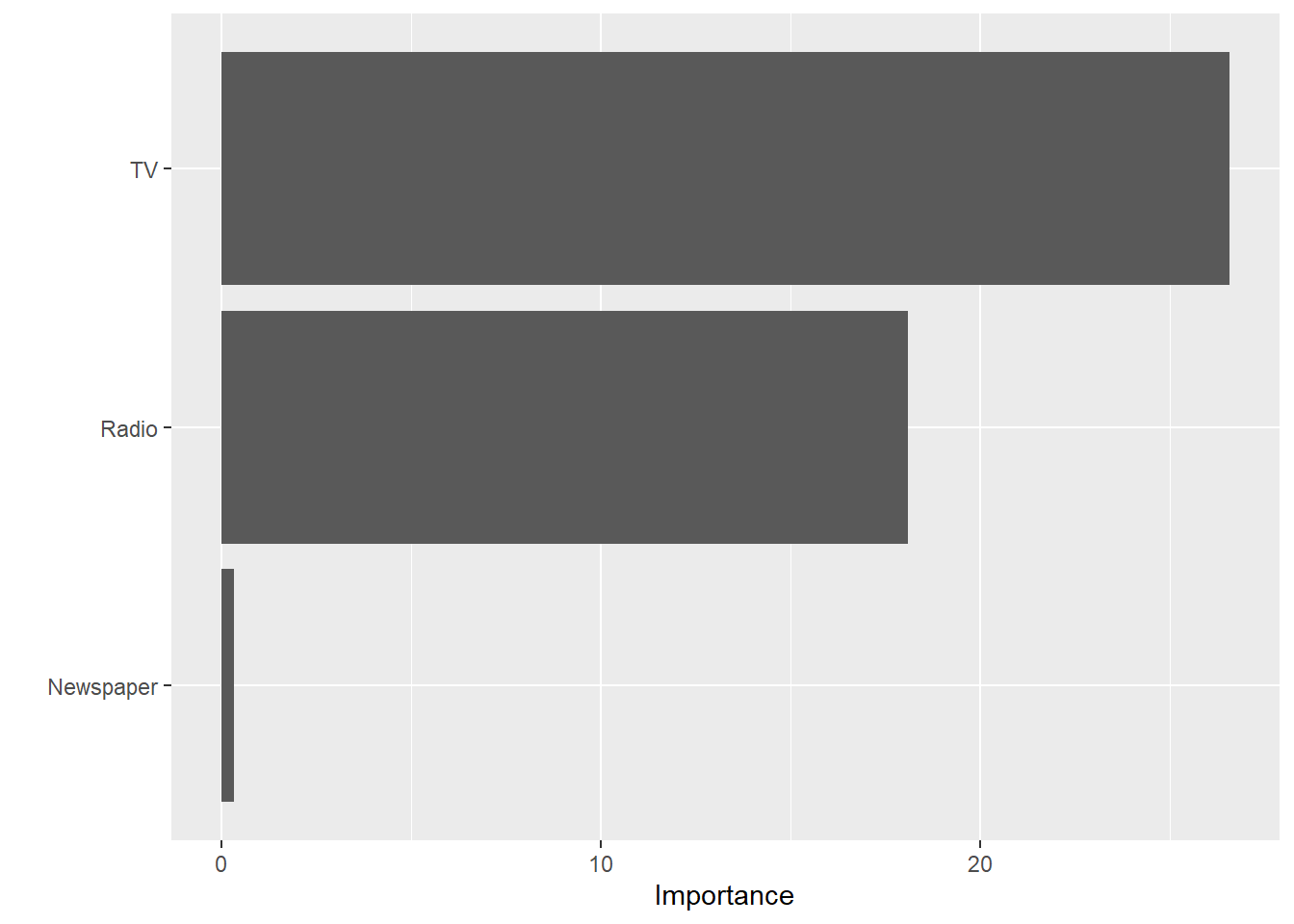

We can also use the vip() function to plot the variable importance for each predictor in our model. For linear regression, the importance value is based on the absolute value of the t-statistic of each estimated coefficient in our trained model object.

vip(lm_fit)

Evaluating Test Set Accuracy

To assess the accuracy of our trained linear regression model,

lm_fit, we must use it to make predictions on our test

data, advertising_test.

This is done with the predict() function from parsnip. This function takes two important arguments:

- a trained parsnip model object
- new_data for which to generate predictions

The code below uses the predict() function to generate a data frame with a single column, .pred, which contains the predicted Sales values on the advertising_test data.

predict(lm_fit, new_data = advertising_test)

| .pred <dbl> |
|---|
| 12.3 |
| 13.3 |
| 18.5 |
| 6.6 |
| 8.1 |
| 14.9 |
| 19.4 |
| 21.7 |
| 11.4 |
| 23.3 |

Generally it’s best to combine the test data set and the predictions

into a single data frame. We create a data frame with the predictions on

the advertising_test data and then use

bind_cols to add the advertising_test data to

the results.

Now we have the model results and the test data in a single data

frame.

advertising_test_results <- predict(lm_fit, new_data = advertising_test) %>%

bind_cols(advertising_test)

# View results

advertising_test_results

| .pred <dbl> | Sales <dbl> | TV <dbl> | Radio <dbl> | Newspaper <dbl> |
|---|---|---|---|---|
| 12.3 | 9.3 | 17.2 | 45.9 | 69.3 |
| 13.3 | 12.9 | 180.8 | 10.8 | 58.4 |
| 18.5 | 19.0 | 204.1 | 32.9 | 46.0 |
| 6.6 | 5.6 | 13.2 | 15.9 | 49.6 |
| 8.1 | 9.7 | 62.3 | 12.6 | 18.3 |
| 14.9 | 15.0 | 142.9 | 29.3 | 12.6 |
| 19.4 | 18.9 | 248.8 | 27.1 | 22.9 |
| 21.7 | 21.4 | 292.9 | 28.3 | 43.2 |
| 11.4 | 11.9 | 112.9 | 17.4 | 38.6 |
| 23.3 | 25.4 | 266.9 | 43.8 | 5.0 |
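A possible shortcut for the predict-then-bind pattern is parsnip's augment() method, which returns the new data with the predictions already attached. The sketch below uses the built-in mtcars data and hypothetical object names (`cars`, `cars_split`, `cars_fit`, `cars_results`). The exact position of the added columns may depend on the installed parsnip version.

```r
library(tidymodels)

set.seed(314)

cars <- as_tibble(mtcars) %>% select(mpg, wt, hp)
cars_split <- initial_split(cars, prop = 0.75)
cars_training <- training(cars_split)
cars_test <- testing(cars_split)

cars_fit <- linear_reg() %>%
  set_engine("lm") %>%
  set_mode("regression") %>%
  fit(mpg ~ ., data = cars_training)

# augment() adds a .pred column to the supplied data in one call
cars_results <- augment(cars_fit, new_data = cars_test)
cars_results
```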

Calculating RMSE and R² on the Test Data

To obtain the RMSE and R² values on our test set results, we can use the rmse() and rsq() functions from the yardstick package.

Both functions take the following arguments:

- data - a data frame with columns that have the true values and predictions
- truth - the column with the true response values
- estimate - the column with predicted values

In the examples below we pass our advertising_test_results to these functions to obtain these values for our test set. Results are always returned as a data frame with the following columns: .metric, .estimator, and .estimate.

# RMSE on test set

rmse(advertising_test_results,
     truth = Sales,
     estimate = .pred)

| .metric <chr> | .estimator <chr> | .estimate <dbl> |
|---|---|---|
| rmse | standard | 1.4 |

# R2 on test set

rsq(advertising_test_results,
    truth = Sales,
    estimate = .pred)

| .metric <chr> | .estimator <chr> | .estimate <dbl> |
|---|---|---|
| rsq | standard | 0.93 |
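To make these two metrics concrete, the sketch below computes them by hand on a tiny made-up data frame (`results` and its values are invented for illustration) and then bundles both yardstick functions with metric_set() so they can be computed in a single call.

```r
library(tidymodels)

# Made-up true values and predictions (illustration only)
results <- tibble(truth = c(3.1, 4.8, 6.2, 7.9, 10.4),
                  .pred = c(2.9, 5.1, 6.0, 8.4, 9.8))

# RMSE: square root of the mean squared prediction error
rmse_manual <- sqrt(mean((results$truth - results$.pred)^2))

# R-squared as computed by rsq(): squared correlation of truth and prediction
rsq_manual <- cor(results$truth, results$.pred)^2

# metric_set() combines several metrics into one function
reg_metrics <- metric_set(rmse, rsq)
reg_metrics(results, truth = truth, estimate = .pred)
```

The values returned by reg_metrics() should agree with rmse_manual and rsq_manual.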

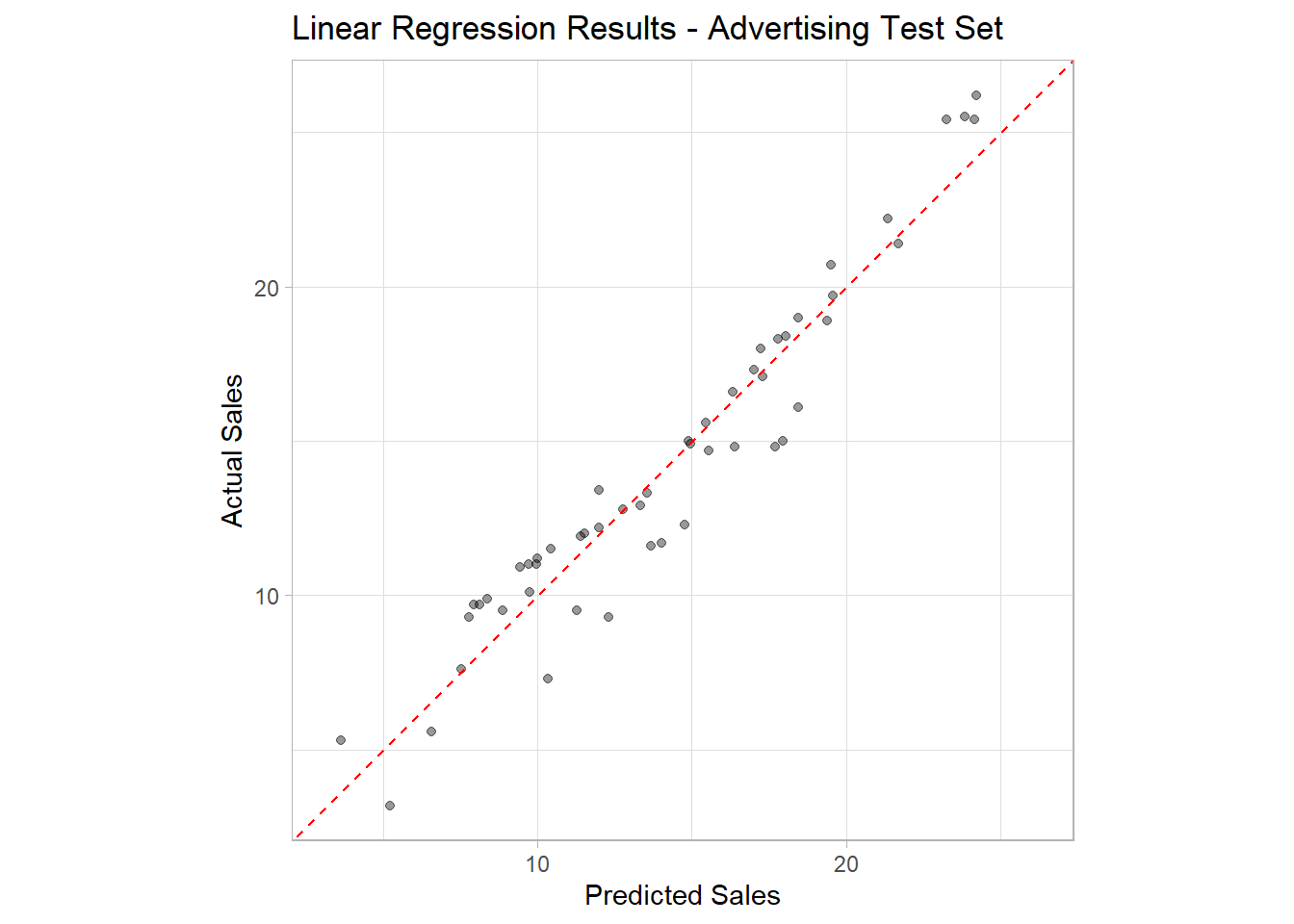

R² Plot

A good way to assess the test set accuracy visually is by making an R² plot. This plot can be used for any regression model.

It plots the actual values (Sales) versus the model predictions (.pred) as a scatter plot. It also plots the line y = x through the origin. This line is a visual representation of the perfect model, where all predicted values are equal to the true values in the test set. The farther the points are from this line, the worse the model fit.

This plot is called an R² plot because R² is simply the squared correlation between the true and predicted values, which are plotted as pairs.

In the code below, we use geom_point() and geom_abline() to make this plot using our advertising_test_results data. The geom_abline() function will plot a line with the provided slope and intercept arguments. The coord_obs_pred() function from the tune package scales the x and y axes to have the same range for accurate comparisons.

ggplot(data = advertising_test_results,

mapping = aes(x = .pred, y = Sales)) +

geom_point(alpha = 0.4) +

geom_abline(intercept = 0, slope = 1, linetype = 2, color = 'red') +

coord_obs_pred() +

labs(title = 'Linear Regression Results - Advertising Test Set',

x = 'Predicted Sales',

y = 'Actual Sales') +

theme_light()

Creating a Machine Learning Workflow

In the previous section, we trained a linear regression model to the

advertising data step-by-step. In this section, we will go

over how to combine all of the modeling steps into a single

workflow.

We will be using the workflows package, which combines a parsnip model with a recipe, and the last_fit() function to build an end-to-end model training pipeline.

Let’s assume we would like to do the following with the

advertising data:

- Split our data into training and test sets

- Feature engineer the training data by removing skewness and normalizing numeric predictors

- Specify a linear regression model

- Train our model on the training data

- Transform the test data with the steps learned in step 2 and obtain predictions using our trained model

The machine learning workflow can be accomplished with a few steps using tidymodels.

Step 1. Split Our Data

First we split our data into training and test sets.

set.seed(314)

# Create a split object

advertising_split <- initial_split(advertising, prop = 0.75,

strata = Sales)

# Build training data set

advertising_training <- advertising_split %>%

training()

# Build testing data set

advertising_test <- advertising_split %>%

testing()

Step 2. Feature Engineering

Next, we specify our feature engineering recipe. In this step, we

do not use prep() or bake().

This recipe will be automatically applied in a later step using the

workflow() and last_fit() functions.

advertising_recipe <- recipe(Sales ~ ., data = advertising_training) %>%

step_YeoJohnson(all_numeric(), -all_outcomes()) %>%

step_normalize(all_numeric(), -all_outcomes())
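Although prep() and bake() are not needed inside a workflow, running them once by hand can help confirm what a recipe does. The sketch below applies the same two steps to the built-in mtcars data (`cars`, `cars_recipe`, and `cars_baked` are hypothetical names) and checks that the predictors end up centered and scaled while the outcome is left unchanged.

```r
library(tidymodels)

cars <- as_tibble(mtcars) %>% select(mpg, wt, hp)

cars_recipe <- recipe(mpg ~ ., data = cars) %>%
  step_YeoJohnson(all_numeric(), -all_outcomes()) %>%
  step_normalize(all_numeric(), -all_outcomes())

# prep() estimates the transformations; bake(new_data = NULL) returns
# the processed training data
cars_baked <- cars_recipe %>%
  prep(training = cars) %>%
  bake(new_data = NULL)

# Predictors should now have mean close to 0 and standard deviation 1
cars_baked %>%
  summarise(across(c(wt, hp), list(mean = mean, sd = sd)))

# The outcome column is not transformed
all.equal(cars_baked$mpg, cars$mpg)
```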

Step 3. Specify a Model

Next, we specify our linear regression model with

parsnip.

lm_model <- linear_reg() %>%

set_engine('lm') %>%

set_mode('regression')

Step 4. Create a Workflow

The workflows package was designed to combine models and recipes into a single object. To create a workflow, we start with workflow() to create an empty workflow and then add our model and recipe with add_model() and add_recipe().

advertising_workflow <- workflow() %>%

add_model(lm_model) %>%

add_recipe(advertising_recipe)
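A workflow object can also be trained and used for prediction directly with fit() and predict(), which is handy when predictions are needed on data outside the original split. The following is a minimal sketch on the built-in mtcars data; all object names in it are hypothetical.

```r
library(tidymodels)

set.seed(314)

cars <- as_tibble(mtcars) %>% select(mpg, wt, hp)
cars_split <- initial_split(cars, prop = 0.75)
cars_training <- training(cars_split)
cars_test <- testing(cars_split)

cars_recipe <- recipe(mpg ~ ., data = cars_training) %>%
  step_normalize(all_numeric(), -all_outcomes())

cars_model <- linear_reg() %>%
  set_engine("lm") %>%
  set_mode("regression")

cars_workflow <- workflow() %>%
  add_model(cars_model) %>%
  add_recipe(cars_recipe)

# fit() trains the recipe on the training data and then fits the model
cars_workflow_fit <- fit(cars_workflow, data = cars_training)

# predict() applies the trained recipe to new data before predicting
cars_preds <- predict(cars_workflow_fit, new_data = cars_test)
cars_preds
```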

Step 5. Execute the Workflow

The last_fit() function will take a workflow object and

apply the recipe and model to a specified data split object.

In the code below, we pass the advertising_workflow

object and advertising_split object into

last_fit().

The last_fit() function will then train the feature

engineering steps on the training data, fit the model to the training

data, apply the feature engineering steps to the test data, and

calculate the predictions on the test data, all in one

step!

advertising_fit <- advertising_workflow %>%

last_fit(split = advertising_split)

To obtain the performance metrics and predictions on the test set, we

use the collect_metrics() and

collect_predictions() functions on our

advertising_fit object.

# Obtain performance metrics on test data

advertising_fit %>% collect_metrics()

| .metric <chr> | .estimator <chr> | .estimate <dbl> | .config <chr> |
|---|---|---|---|
| rmse | standard | 1.41 | Preprocessor1_Model1 |
| rsq | standard | 0.93 | Preprocessor1_Model1 |
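By default, last_fit() reports RMSE and R² for regression models. If other metrics are of interest, a metric set can be supplied through its metrics argument. The sketch below adds mean absolute error (mae) on the built-in mtcars data; the object names are hypothetical.

```r
library(tidymodels)

set.seed(314)

cars <- as_tibble(mtcars) %>% select(mpg, wt, hp)
cars_split <- initial_split(cars, prop = 0.75)

cars_workflow <- workflow() %>%
  add_model(linear_reg() %>% set_engine("lm") %>% set_mode("regression")) %>%
  add_recipe(recipe(mpg ~ ., data = training(cars_split)) %>%
               step_normalize(all_numeric(), -all_outcomes()))

# Request RMSE, R-squared, and MAE on the test set
cars_last_fit <- cars_workflow %>%
  last_fit(split = cars_split,
           metrics = metric_set(rmse, rsq, mae))

cars_metrics <- collect_metrics(cars_last_fit)
cars_metrics
```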

We can save the test set predictions by using the collect_predictions() function. This function returns a data frame which will have the response variable values from the test set and a column named .pred with the model predictions.

# Obtain test set predictions data frame

test_results <- advertising_fit %>%

collect_predictions()

# View results

test_results

| id <chr> | .pred <dbl> | .row <int> | Sales <dbl> | .config <chr> |
|---|---|---|---|---|
| train/test split | 11.4 | 3 | 9.3 | Preprocessor1_Model1 |
| train/test split | 14.0 | 5 | 12.9 | Preprocessor1_Model1 |
| train/test split | 18.8 | 15 | 19.0 | Preprocessor1_Model1 |
| train/test split | 6.3 | 23 | 5.6 | Preprocessor1_Model1 |
| train/test split | 8.6 | 25 | 9.7 | Preprocessor1_Model1 |
| train/test split | 15.4 | 27 | 15.0 | Preprocessor1_Model1 |
| train/test split | 19.5 | 29 | 18.9 | Preprocessor1_Model1 |
| train/test split | 21.5 | 31 | 21.4 | Preprocessor1_Model1 |
| train/test split | 12.3 | 32 | 11.9 | Preprocessor1_Model1 |
| train/test split | 22.4 | 37 | 25.4 | Preprocessor1_Model1 |

Workflow for Home Selling Price

For another example of fitting a machine learning workflow, let’s use

linear regression to predict the selling price of homes using the

home_sales data.

For our feature engineering steps, we will include removing skewness

and normalizing numeric predictors, and creating dummy variables for the

city variable.

Remember that most machine learning algorithms need a numeric feature matrix. Therefore we must also transform character or factor predictor variables to dummy variables.

Step 1. Split Our Data

First we split our data into training and test sets.

set.seed(271)

# Create a split object

homes_split <- initial_split(home_sales, prop = 0.75,

strata = selling_price)

# Build training data set

homes_training <- homes_split %>%

training()

# Build testing data set

homes_test <- homes_split %>%

testing()

Step 2. Feature Engineering

Next, we specify our feature engineering recipe. In this step, we

do not use prep() or bake().

This recipe will be automatically applied in a later step using the

workflow() and last_fit() functions.

For our model formula, we are specifying that

selling_price is our response variable and all others are

predictor variables.

homes_recipe <- recipe(selling_price ~ ., data = homes_training) %>%

step_YeoJohnson(all_numeric(), -all_outcomes()) %>%

step_normalize(all_numeric(), -all_outcomes()) %>%

  step_dummy(all_nominal(), -all_outcomes())

As an intermediate step, let’s check our recipe by prepping it on the training data and applying it to the test data. We want to make sure that we get the correct transformations.

From the results below, things look correct.

homes_recipe %>%

prep(training = homes_training) %>%

  bake(new_data = homes_test)

| house_age <dbl> | bedrooms <dbl> | bathrooms <dbl> | sqft_living <dbl> | sqft_lot <dbl> | sqft_basement <dbl> | floors <dbl> |
|---|---|---|---|---|---|---|
| 1.022 | 3.07 | 0.965 | 0.2502 | 0.5042 | -0.54 | 0.056 |
| 0.749 | -0.56 | 1.912 | 1.1318 | 0.5847 | 1.86 | 0.056 |
| 0.841 | 0.72 | 0.468 | 1.0711 | 0.8294 | 1.87 | 0.056 |
| 0.654 | -0.56 | -0.048 | 0.5156 | 0.4778 | -0.54 | 0.056 |
| -0.418 | 0.72 | -0.048 | 1.9520 | 0.6702 | -0.54 | 0.056 |
| -0.676 | 0.72 | 2.804 | 1.2212 | 0.4064 | -0.54 | 0.056 |
| -0.418 | 0.72 | -0.048 | 1.7739 | 0.2538 | -0.54 | 0.056 |
| -0.960 | -0.56 | -0.048 | -1.0459 | -0.6607 | -0.54 | 0.056 |
| 1.450 | -0.56 | -0.048 | -0.5056 | 0.1787 | -0.54 | 0.056 |
| 1.366 | 0.72 | -0.048 | -0.6610 | 0.1365 | -0.54 | 0.056 |
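The preview above shows only the first few numeric columns, so the dummy variables created for city are not visible. To see what step_dummy() produces, the sketch below applies it to a four-row toy data frame (`homes_toy`, built from values in the home_sales preview) and prints the resulting column names. With the default settings, one dummy column is created for every factor level except the first.

```r
library(tidymodels)

# Toy data for illustration only
homes_toy <- tibble(
  selling_price = c(280000, 487000, 570000, 465000),
  city = factor(c("Auburn", "Seattle", "Bellevue", "Seattle")),
  bedrooms = c(6, 4, 3, 3)
)

toy_baked <- recipe(selling_price ~ ., data = homes_toy) %>%
  step_dummy(all_nominal(), -all_outcomes()) %>%
  prep(training = homes_toy) %>%
  bake(new_data = NULL)

# city is replaced by indicator columns such as city_Bellevue and city_Seattle;
# Auburn, the first level, is the reference level and gets no column
names(toy_baked)
toy_baked
```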

Step 3. Specify a Model

Next, we specify our linear regression model with

parsnip.

lm_model <- linear_reg() %>%

set_engine('lm') %>%

set_mode('regression')

Step 4. Create a Workflow

Next, we combine our model and recipe into a workflow object.

homes_workflow <- workflow() %>%

add_model(lm_model) %>%

add_recipe(homes_recipe)

Step 5. Execute the Workflow

Finally, we process our machine learning workflow with

last_fit().

homes_fit <- homes_workflow %>%

last_fit(split = homes_split)

To obtain the performance metrics and predictions on the test set, we

use the collect_metrics() and

collect_predictions() functions on our

homes_fit object.

# Obtain performance metrics on test data

homes_fit %>%

  collect_metrics()

| .metric <chr> | .estimator <chr> | .estimate <dbl> | .config <chr> |
|---|---|---|---|
| rmse | standard | 9.3e+04 | Preprocessor1_Model1 |
| rsq | standard | 7.5e-01 | Preprocessor1_Model1 |

We can save the test set predictions by using the collect_predictions() function. This function returns a data frame which will have the response variable values from the test set and a column named .pred with the model predictions.

# Obtain test set predictions data frame

homes_results <- homes_fit %>%

collect_predictions()

# View results

homes_results

| id <chr> | .pred <dbl> | .row <int> | selling_price <dbl> | .config <chr> |
|---|---|---|---|---|
| train/test split | 311720 | 1 | 280000 | Preprocessor1_Model1 |
| train/test split | 777525 | 13 | 795000 | Preprocessor1_Model1 |
| train/test split | 747544 | 14 | 835000 | Preprocessor1_Model1 |
| train/test split | 697076 | 18 | 738000 | Preprocessor1_Model1 |
| train/test split | 858351 | 40 | 885000 | Preprocessor1_Model1 |
| train/test split | 786019 | 42 | 785500 | Preprocessor1_Model1 |
| train/test split | 828050 | 47 | 815000 | Preprocessor1_Model1 |
| train/test split | 329904 | 50 | 330000 | Preprocessor1_Model1 |
| train/test split | 267021 | 53 | 268000 | Preprocessor1_Model1 |
| train/test split | 227552 | 54 | 270000 | Preprocessor1_Model1 |

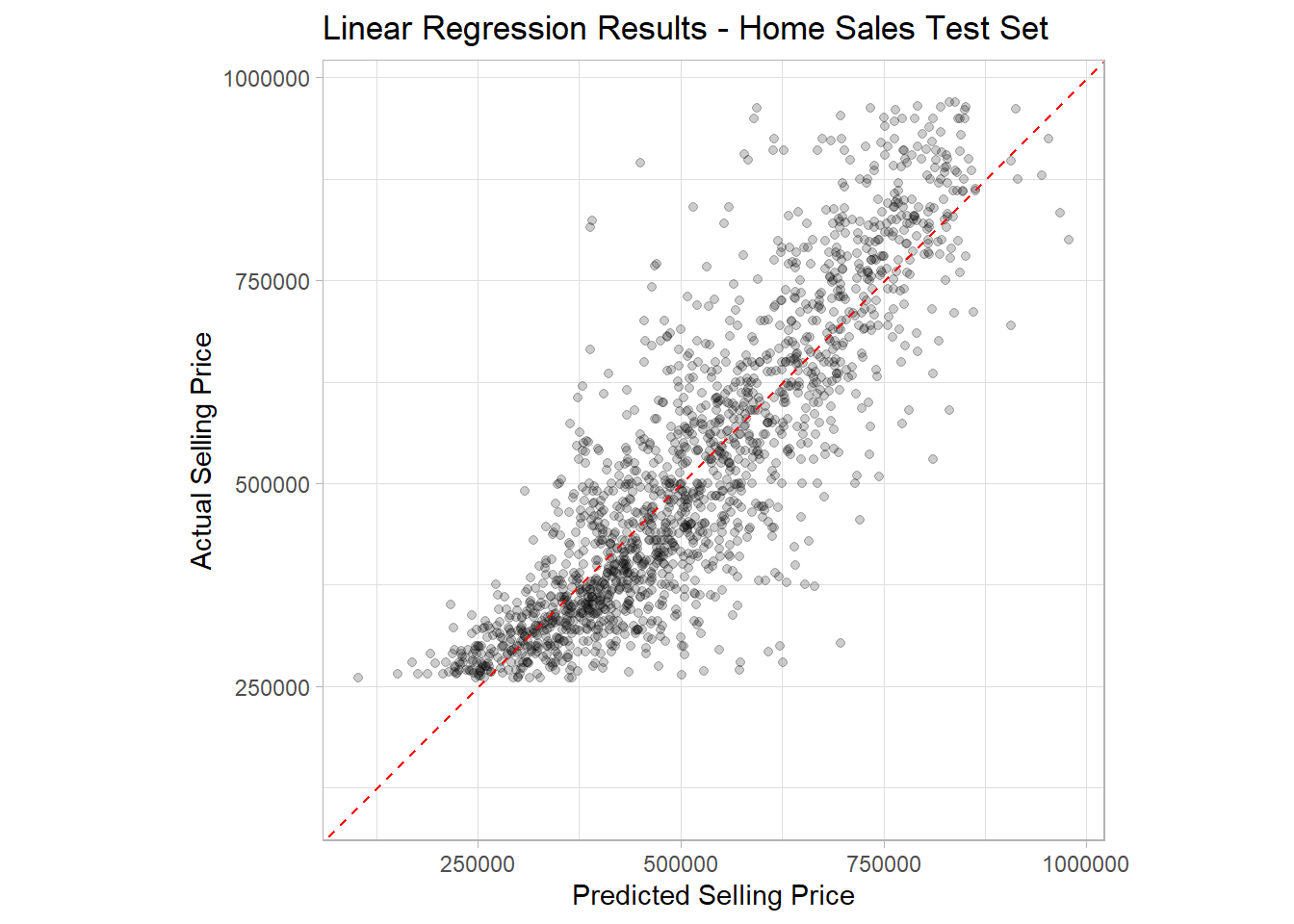

R² Plot

Finally, let’s use the homes_results data frame to make an R² plot to visualize our model performance on the test data set.

ggplot(data = homes_results,

mapping = aes(x = .pred, y = selling_price)) +

geom_point(alpha = 0.2) +

geom_abline(intercept = 0, slope = 1, color = 'red', linetype = 2) +

coord_obs_pred() +

labs(title = 'Linear Regression Results - Home Sales Test Set',

x = 'Predicted Selling Price',

y = 'Actual Selling Price') +

theme_light()

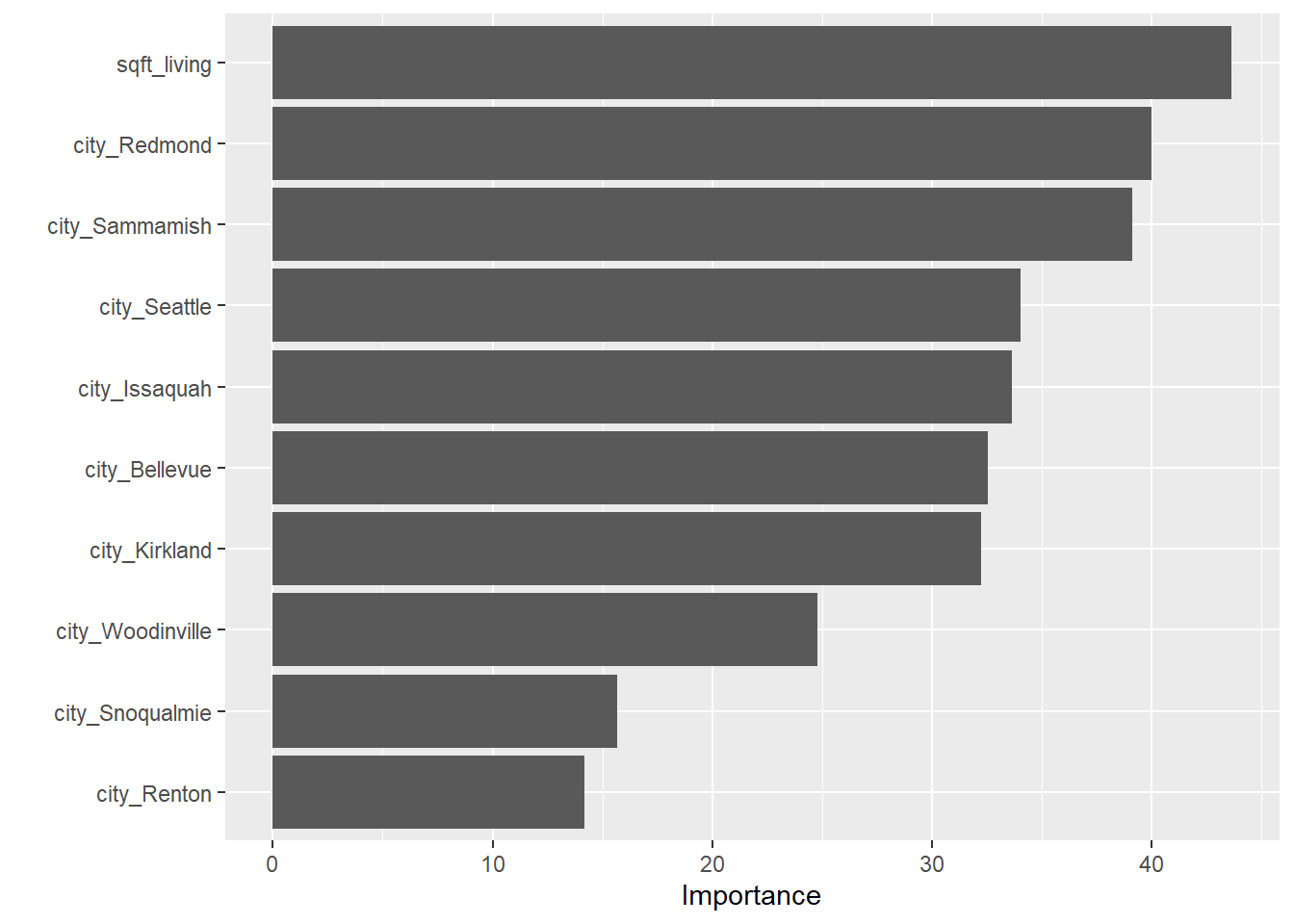

Variable Importance

Creating a workflow and using the last_fit() function is a great option for automating a machine learning pipeline. However, the object returned by last_fit() cannot be passed directly to vip() to explore variable importance on the training data.

To obtain a variable importance plot with vip(), we can use the methods introduced at the beginning of the tutorial. This involves fitting the model with the fit() function on the training data.

To do this, we will train our homes_recipe and transform

our training data. Then we use the fit() function to train

our linear regression object, lm_model, on our processed

data.

Then we can use the vip() function to see which

predictors were most important.

homes_training_baked <- homes_recipe %>%

prep(training = homes_training) %>%

bake(new_data = NULL)

# View results

homes_training_baked

| house_age <dbl> | bedrooms <dbl> | bathrooms <dbl> | sqft_living <dbl> | sqft_lot <dbl> | sqft_basement <dbl> | floors <dbl> |
|---|---|---|---|---|---|---|
| -1.679 | 0.72 | -0.048 | -0.4452 | -0.30589 | -0.54 | 0.056 |
| -1.116 | 0.72 | -0.048 | 0.5620 | -0.32551 | -0.54 | 0.056 |
| 1.282 | 0.72 | -0.584 | -0.2698 | 0.26344 | -0.54 | 0.056 |
| 0.258 | -0.56 | -0.048 | -0.3129 | 0.33013 | -0.54 | 0.056 |
| -0.814 | 0.72 | -1.143 | 0.6645 | 0.46626 | -0.54 | -2.078 |
| -1.116 | -0.56 | -0.584 | -1.2087 | -0.16007 | -0.54 | 0.056 |
| -0.960 | -0.56 | -0.048 | -1.0459 | -0.64662 | -0.54 | 0.056 |
| 1.022 | -0.56 | -0.584 | -0.9243 | 0.32370 | -0.54 | 0.056 |
| 1.450 | -0.56 | -0.048 | -1.1173 | 0.25404 | 1.84 | -2.078 |
| 1.197 | 0.72 | -0.048 | -0.2985 | 0.17794 | -0.54 | 0.056 |

Now we fit our linear regression model to the baked training data.

homes_lm_fit <- lm_model %>%

fit(selling_price ~ ., data = homes_training_baked)

Finally, we use vip() on our trained linear regression model. It appears that square footage and location are the most important predictor variables.

vip(homes_lm_fit)
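Depending on the installed versions of tune and workflows, it may also be possible to pull the trained model out of a last_fit() result and pass it to vip(), which avoids prepping and baking the recipe by hand. The sketch below assumes that extract_workflow() and extract_fit_parsnip() are available. It uses the built-in mtcars data and hypothetical object names.

```r
library(tidymodels)
library(vip)

set.seed(271)

cars <- as_tibble(mtcars) %>% select(mpg, wt, hp, qsec)
cars_split <- initial_split(cars, prop = 0.75)

cars_workflow <- workflow() %>%
  add_model(linear_reg() %>% set_engine("lm") %>% set_mode("regression")) %>%
  add_recipe(recipe(mpg ~ ., data = training(cars_split)) %>%
               step_normalize(all_numeric(), -all_outcomes()))

cars_last_fit <- cars_workflow %>%
  last_fit(split = cars_split)

# Extract the workflow trained on the training set, then its parsnip model
cars_trained_model <- cars_last_fit %>%
  extract_workflow() %>%
  extract_fit_parsnip()

# Variable importance for the model that last_fit() trained
vip(cars_trained_model)
```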

Copyright © David Svancer 2023